AI has officially taken a seat at the healthcare M&A table. From accelerating due diligence to modeling integration outcomes, machine learning is now a fixture in the buy-side toolkit. But this is not a “plug it in and profit” scenario.

Acquirers need to ask harder questions. Can the AI actually deliver under regulatory pressure? Is the codebase production-ready—or just stitched together by three freelancers? Does the target’s data license carry over post-close? And what happens when your clinical team flat-out refuses to use the tool you just spent eight figures on?

This article unpacks what can go wrong—and what you need to do differently—when artificial intelligence is part of the deal.

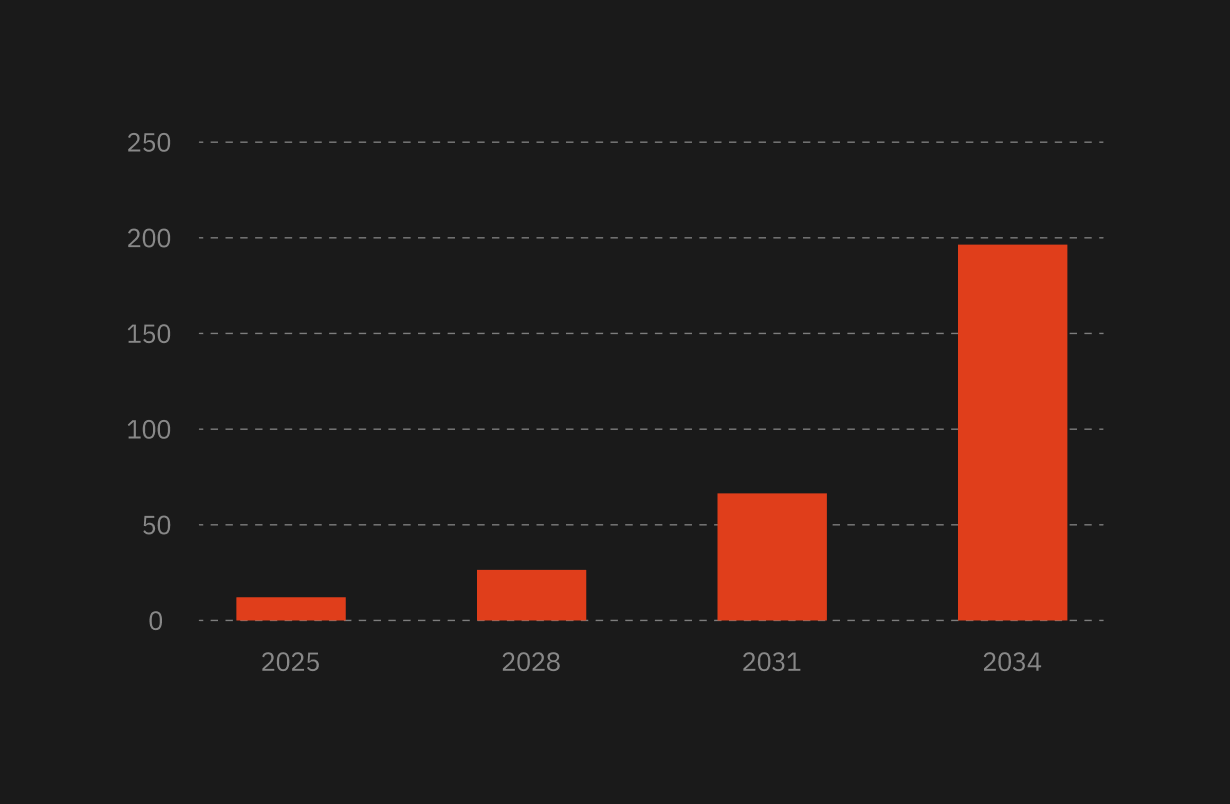

Why AI Is Now a Fixture in Healthcare M&A

AI Tools Are Accelerating Due Diligence—But Not Without Risk

Private equity and strategic buyers are using AI to compress diligence timelines. Automated tools can scan contracts, flag anomalies in claims data, and identify unusual billing patterns in days instead of weeks. But faster doesn’t mean safer. These tools only surface what they’ve been trained to recognize. Critical nuances—like undocumented data usage or half-built integrations—can slip through.

Buyers Are Using AI to Spot Operational Inefficiencies

Beyond diligence, AI is helping acquirers model synergies. NLP engines comb through clinical workflow documentation to surface cost-saving opportunities. Predictive tools project how quickly you can rationalize staffing or consolidate redundant infrastructure. But every forecast is only as good as its input data. Garbage in, garbage out still applies.

Predictive Modeling Is Shaping Post-Deal Integration

Some buyers are going further—using AI to simulate post-deal value creation. For example, they model how combining patient datasets could improve diagnostic accuracy or clinical trial matching. The upside is real. So are the compliance and operational risks.

AI Startups: High Valuations, Higher Risks

Risks Hiding in the Code

Some healthcare AI startups aren’t built for scale. They’ve raised fast, shipped prototypes, and made promises based on pilot data. But under the hood, you often find technical debt, brittle infrastructure, and undocumented workflows.

Buyers need to audit the actual codebase—not just the pitch deck. Who wrote it? How was it tested? Can it be maintained and scaled under your infrastructure? If the founding CTO walks after the earn-out, will anyone know how it works?

AI-Driven Diligence: Speed vs. Substance

Relying on AI to evaluate AI is not a safety net. Algorithms trained on legacy transaction data might miss entirely new risk vectors—like use of non-compliant data, weak consent management, or biased training sets. Human review still matters. So does challenging the assumptions baked into the AI’s conclusions.

AI Vendor Risk and Data Compliance Red Flags

Many AI startups rely on third-party APIs, cloud models, or off-the-shelf training sets. Each of those dependencies creates legal and compliance exposure. You may be acquiring a system that depends on someone else’s intellectual property or violates your compliance policies. Check the licenses. Confirm patient consent. Know which cloud regions data is stored in—and whether that aligns with HIPAA, GDPR, or the EU AI Act.

What’s Breaking Post-Acquisition

Cultural Clashes Between Engineers and Clinicians

Tech teams move fast. Healthcare doesn’t. When AI engineers who “push to prod” weekly meet clinical teams who require peer-reviewed validation, integration grinds to a halt. Clinicians may reject tools they don’t trust. Engineers may burn out trying to retrofit workflows they don’t understand. This is not a technical problem—it’s an organizational one.

Integration Failures in Health Tech Acquisitions

EHR incompatibility, siloed data lakes, nonstandard ontologies—healthcare M&A rarely involves clean, simple systems. Merging AI systems adds more friction: mismatched data schemas, proprietary pipelines, and deployment environments that don’t talk to each other. In the best case, this delays time to value. In the worst case, it breaks live systems.

AI Startups Struggling With Scale

AI tools that perform well on 10,000 patients may not hold up across a national health system. Edge cases balloon. Model drift accelerates. Retraining and compute costs go through the roof. If the startup’s entire infrastructure depends on a dev environment with no access control or observability—you’re inheriting a liability, not a product.

What Smart Buyers Are Doing Differently

Scrutinizing AI Like Any Other Regulated Tech

Don’t treat AI like a black box. Treat it like medical software. Ask for audit trails, performance benchmarks across demographics, and documentation of FDA clearances or CE marking. If it’s a clinical tool, confirm the intended use and regulatory status. If it’s analytics, check how the output is validated and used operationally.
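
To make “performance benchmarks across demographics” concrete, here is a minimal Python sketch of a subgroup check you might run on a target’s validation results. It assumes a hypothetical record format of `(group, y_true, y_pred)` tuples and uses plain accuracy; a clinical review would typically also break out sensitivity and specificity. The function names and the 5-point `max_gap` threshold are illustrative assumptions, not an industry standard.

```python
from collections import defaultdict


def subgroup_accuracy(records):
    """Return overall accuracy and accuracy per demographic group.

    records: iterable of (group, y_true, y_pred) tuples. This format is a
    hypothetical assumption; real evaluation exports will need mapping into it.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


def flag_gaps(overall, per_group, max_gap=0.05):
    """List groups whose accuracy trails the overall figure by more than max_gap.

    max_gap=0.05 is an illustrative default, not a regulatory threshold.
    """
    return sorted(g for g, acc in per_group.items() if overall - acc > max_gap)


# Toy data: group A is always right, group B is right half the time.
records = [("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0), ("B", 1, 0), ("B", 0, 0)]
overall, per_group = subgroup_accuracy(records)
print(flag_gaps(overall, per_group))  # ['B']
```

With this toy input, group B trails overall accuracy by about 33 percentage points and gets flagged. In practice, small subgroups produce noisy estimates, so report sample sizes alongside any gap.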

Running Parallel Human + AI Assessments

The most experienced buyers don’t eliminate human diligence—they complement it. Legal, technical, and clinical teams still run full assessments while AI assists with prioritization. Think of the algorithm as a filter, not a final answer.

Stress-Testing Startup Claims With Sandbox Pilots

Don’t just review the AI’s demo. Run it in a sandbox with your data. See how it handles real noise, gaps, and edge cases. Set up test scenarios. Measure accuracy and performance under load. If the startup won’t cooperate, that’s your answer.
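
A sandbox pilot can start as a small harness like the sketch below, which runs a candidate model over labeled cases drawn from your own data and reports accuracy, error rate, and a nearest-rank 95th-percentile latency. `predict` is a hypothetical stand-in for however the target’s model is actually called (an HTTP endpoint, an SDK, a batch job), and the thread pool only approximates load. Treat it as a starting point under those assumptions, not a full load test.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def _run_case(predict, case):
    """Time one prediction; a crash on messy input counts as an error."""
    x, expected = case
    start = time.perf_counter()
    try:
        ok, hit = True, predict(x) == expected
    except Exception:
        ok, hit = False, False
    return ok, hit, time.perf_counter() - start


def sandbox_eval(predict, cases, workers=8):
    """Run labeled cases through a hypothetical `predict` callable concurrently.

    cases: non-empty list of (input, expected_label) pairs from your own data.
    workers: number of concurrent calls; 8 is an arbitrary illustrative default.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda c: _run_case(predict, c), cases))
    n = len(results)
    latencies = sorted(r[2] for r in results)
    p95 = latencies[max(0, math.ceil(0.95 * n) - 1)]  # nearest-rank percentile
    return {
        "accuracy": sum(r[1] for r in results) / n,
        "error_rate": sum(not r[0] for r in results) / n,
        "p95_latency_s": p95,
    }


# Toy model: predicts parity. None mimics a malformed record that crashes it.
cases = [(1, 1), (2, 0), (3, 0), (None, 0)]
report = sandbox_eval(lambda x: x % 2, cases)
print(report["accuracy"], report["error_rate"])  # 0.5 0.25
```

Threads add real concurrency when `predict` waits on a network call. For a CPU-bound model running in the same Python process, a process pool or a dedicated load-testing tool would give a more honest picture of performance under load.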

Building Integration Roadmaps That Include AI Literacy

AI isn’t plug-and-play. Clinicians and staff need to understand it to trust it. Smart buyers build training programs and usage guidelines into the integration plan—especially when AI outputs impact clinical decision-making. They also invest in internal AI governance teams to oversee model drift, fairness, and safety post-close.
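
Post-close drift monitoring often starts with a simple distribution comparison. The sketch below computes the population stability index (PSI), a metric borrowed from credit-risk modeling, between a baseline sample of model scores and a recent one: the sum over bins of (actual share minus expected share) times the log of their ratio. The equal-width binning, the `eps` floor for empty bins, and the function name are assumptions for illustration. A common rule of thumb reads PSI above roughly 0.25 as a major shift, but thresholds for clinical models are something a governance team would need to set and validate.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample (expected) and a recent sample (actual).

    Bins are equal-width over the baseline's range; recent values outside
    that range are clipped into the edge bins. Empty bins are floored at eps
    so the log term stays finite.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        n = len(values)
        return [max(c / n, eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Baseline scores spread over [0, 1); recent scores crowd into the upper half.
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(baseline, baseline))  # 0.0
print(population_stability_index(baseline, recent) > 0.25)  # True
```

PSI only says that the input or score distribution moved, not whether the model got worse, so it works best as a trigger for the kind of human review and re-validation described above.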

The Fix-It Kit for AI-Powered Healthcare Deals

You can’t diligence your way out of a broken codebase, missing FDA clearance, or a clinical team that won’t touch your shiny new AI tool. But you can catch these messes early—if you’ve got the right gear. Here’s a quick look at tools that help you spot and reduce AI-related risk during M&A. Not all were built for M&A—but they’re useful when evaluating AI-heavy targets.

Conclusion: Use the AI. Don’t Get Used.

AI can deliver serious value in healthcare M&A—but only if you ask the hard questions. Is the code reliable? Is the data legal? Are the clinicians on board? Will the system scale? If the answer to any of these is no, the deal is a risk multiplier, not a growth lever.

Use the AI—but on your terms. Compress the timeline, sure. Surface risks, absolutely. Just don’t mistake a model for a mind. Know what you’re buying—and who’s going to be stuck scaling it.
