Machine learning (ML) has grown rapidly in recent years. While traditionally executed primarily on remote servers, ML is quickly becoming integral to modern web and mobile applications.

I’m a Senior Software Engineer at MEV with 13 years of experience in the industry. Recently, I had the opportunity to develop a web application utilizing machine learning. During this project, I uncovered a few tools and techniques that may help others go beyond classical programming to solve similar tasks, which I’ll cover below.

Problem Statement

One of the ML tasks in our project was implementing real-time object detection to generate a bounding box that acts as a safety perimeter around a specific area in a video stream. If breached, a notification would be triggered to alert the team.
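To make the breach check concrete, here is a minimal sketch in plain JavaScript. It is not the project's actual code: the box shape (`{ x, y, width, height }` in pixels), the margin, the labels, and the helper names `expandBox`, `boxesOverlap`, and `findBreaches` are all assumptions made for illustration. It treats the perimeter as an axis-aligned rectangle and reports a breach when any other detected box overlaps it.

```javascript
// Boxes are { x, y, width, height } in pixels with a top-left origin
// (an assumed convention, not taken from the project).

/** Returns true when two axis-aligned boxes overlap with non-zero area. */
function boxesOverlap(a, b) {
  return (
    a.x < b.x + b.width &&
    b.x < a.x + a.width &&
    a.y < b.y + b.height &&
    b.y < a.y + a.height
  );
}

/** Grows a detected box by `margin` pixels on every side to form a perimeter. */
function expandBox(box, margin) {
  return {
    x: box.x - margin,
    y: box.y - margin,
    width: box.width + 2 * margin,
    height: box.height + 2 * margin,
  };
}

/** Returns the detections whose boxes intrude into the perimeter. */
function findBreaches(perimeter, detections) {
  return detections.filter((d) => boxesOverlap(perimeter, d.box));
}

// Hypothetical data: one protected area and two other detections in a frame.
const perimeter = expandBox({ x: 100, y: 100, width: 200, height: 150 }, 20);
const detections = [
  { label: "person", box: { x: 50, y: 90, width: 40, height: 80 } },
  { label: "cart", box: { x: 400, y: 400, width: 50, height: 50 } },
];

const breaches = findBreaches(perimeter, detections);
if (breaches.length > 0) {
  // In a real application this is where the team notification would be sent.
  console.log(`Perimeter breached by: ${breaches.map((d) => d.label).join(", ")}`);
}
```

In a video stream, a check like this would run once per processed frame, and the notification would probably need debouncing so that a single intrusion does not raise an alert on every frame.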

Justification of Choice: Why We Chose TensorFlow.js

External AI Services Don’t Meet HIPAA Compliance

Because the device was required to comply with the HIPAA standard for processing and storing protected health information, we immediately ruled out external AI services (e.g., ChatGPT or Gemini) that do not meet this standard. Moreover, these services incur additional costs.

AI/ML as a Service (AIaaS) Has Practical Disadvantages

Popular cloud platforms offer services for working with ML, such as Amazon SageMaker, Azure Machine Learning, and Google Vertex AI. These platforms provide a wide range of tools for creating, training, and deploying ML models.

However, the main disadvantages of these systems are:

- Their cost (especially when using machines with GPUs)

- Latency in data transmission over the network

- The need to ensure the confidentiality of processed data

Hosting the Model on a Dedicated Server or FaaS Requires Additional Effort

In addition to the disadvantages noted above, further performance issues arise when the model runs on a server without a GPU or on a FaaS platform (e.g., AWS Lambda). Moreover, this route would require developing an API with all necessary security measures.

Creating a Native Application Takes Time

Since we were dealing with webOS, we had the opportunity to place the ML model directly on the device, develop a native service in C/C++ for inference and necessary data processing, and use it in the web application.

This approach has several advantages:

- Data Security: Data is not transmitted anywhere and is processed on the device

- Low Latency: No data needs to be transmitted over the network

- Ability to Use SoC Acceleration: GPU, NPU, etc.

In our case, the main obstacle to using this approach was the additional time required to learn and apply several new technologies, which we did not have due to release deadlines.


The Winner: Developing a Web Application With the Right Library

In this case, we get the same advantages as in the previous point (except for the direct use of SoC capabilities) and the speed of integrating ML functionality into the existing web application.

When searching for libraries to work with ML in web applications, TensorFlow.js emerged as the most attractive option.

What is TensorFlow.js?

TensorFlow.js is a JavaScript library developed by Google that allows developers to create, train, and execute machine learning models directly in the browser or on a server using Node.js. This library is part of the broader TensorFlow ecosystem.

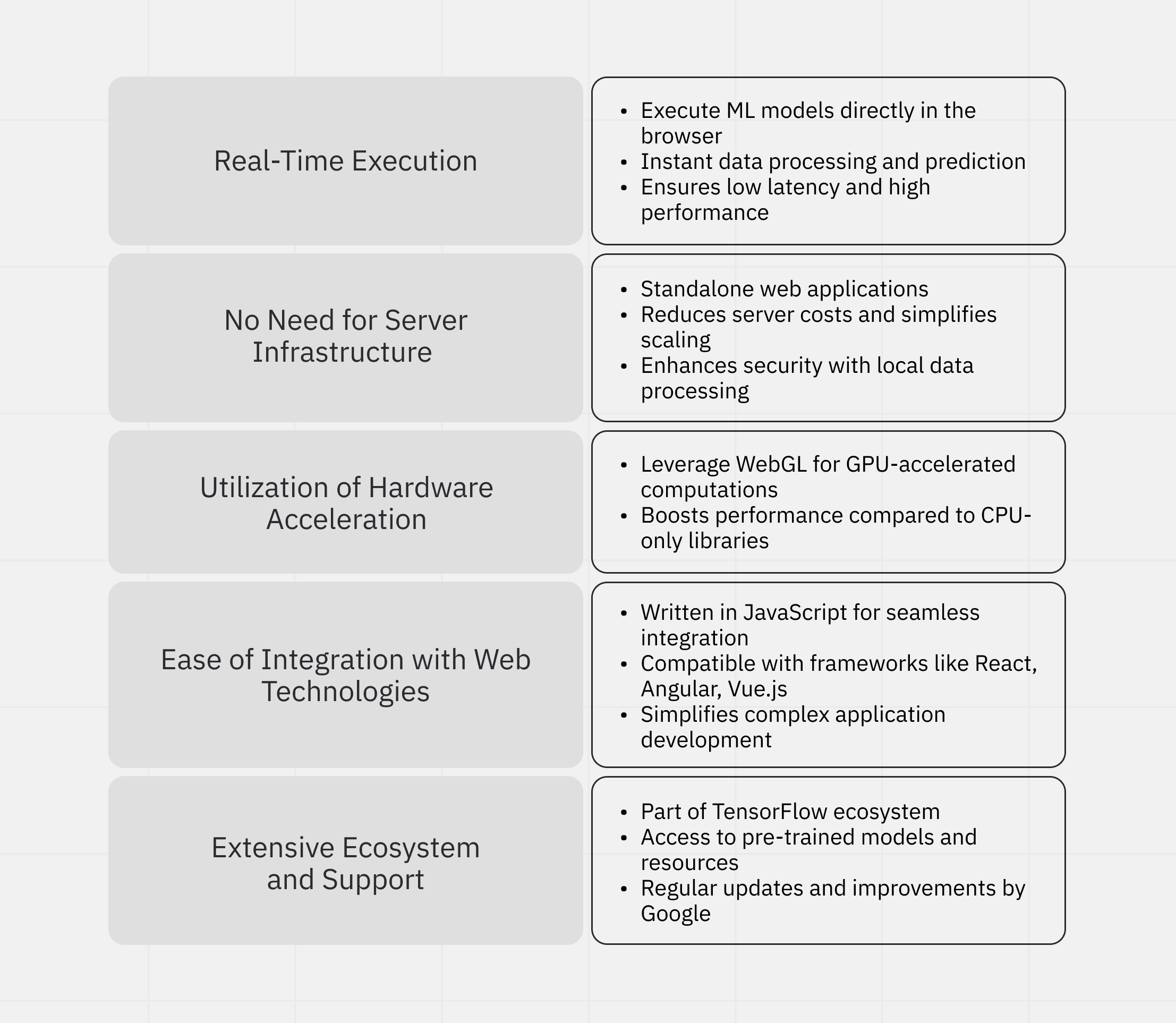

Advantages of TensorFlow.js for Web Applications

- Real-Time Execution

One of TensorFlow.js's main advantages is the ability to execute ML models directly in the browser in real-time. Data processing and prediction can happen instantly without contacting a server. This approach ensures low latency and high performance, which is critical for many interactive web applications.

- No Need for Server Infrastructure

With TensorFlow.js, developers can create standalone web applications that do not require server infrastructure for machine learning processing. This reduces server costs and simplifies application scaling. Additionally, it enhances security since sensitive user data can be processed locally without leaving the user’s device.

- Utilization of Hardware Acceleration

TensorFlow.js can leverage hardware acceleration through WebGL, allowing complex computations to be performed efficiently on the GPU. This significantly boosts performance compared to other libraries that may be limited to CPU only.

- Ease of Integration with Web Technologies

Since TensorFlow.js is written in JavaScript, it integrates easily with existing web technologies and frameworks such as React, Angular, or Vue.js. This seamless integration simplifies the development of complex applications, reducing the time and effort required for ML integration.

- Extensive Ecosystem and Support

TensorFlow.js is part of the TensorFlow ecosystem, providing access to many pre-trained models, tools, and resources. Additionally, Google actively supports and develops this project, ensuring regular updates and improvements.
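As a rough illustration of the hardware acceleration point in the list above, the sketch below tries the WebGL backend first and falls back to slower ones. The helper name `selectBackend` and the fallback order are assumptions for this example; `tf.setBackend`, `tf.ready`, and `tf.getBackend` are part of the TensorFlow.js API. The `tf` namespace is passed in as a parameter so the helper does not depend on how the library is loaded, and the `wasm` backend is only available if its separate package has been registered.

```javascript
/**
 * Tries each backend name in order and returns the name of the first one
 * that initializes successfully. `tf` is the TensorFlow.js namespace,
 * supplied by the caller (for example from `import * as tf from "@tensorflow/tfjs"`).
 */
async function selectBackend(tf, preferred = ["webgl", "wasm", "cpu"]) {
  for (const name of preferred) {
    try {
      // tf.setBackend resolves to false when the backend fails to initialize.
      if (await tf.setBackend(name)) {
        await tf.ready();
        return tf.getBackend();
      }
    } catch (err) {
      // A backend that is not registered may throw; try the next candidate.
    }
  }
  throw new Error(`No backend could be initialized: ${preferred.join(", ")}`);
}

// Hypothetical usage inside an async function in the web application:
//   const backend = await selectBackend(tf);
//   console.log(`Running inference on the "${backend}" backend`);
```

Logging the selected backend at startup is a cheap way to notice when a device has silently fallen back to the CPU, which would noticeably slow real-time inference.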

An additional advantage of using the TensorFlow ecosystem is the availability of tools for converting models to the TensorFlow.js and TensorFlow Lite formats (notably, TensorFlow Lite models can be used in web applications in addition to mobile devices). However, it's important to note that not all functions available in TensorFlow can be successfully converted to another format. Therefore, it is necessary to check for compatibility and make appropriate changes or choose another model.

Challenges in Using TensorFlow.js for Model Training

TensorFlow.js allows developers to use a single programming language for both model training and execution. This presents an advantage because web developers can potentially develop and maintain the product without involving ML engineers. However, the reality is that machine learning is a niche field, and not every web developer has the expertise to leverage it. Therefore, involving qualified ML engineers is generally unavoidable.

That said, because the most popular machine learning tools and libraries predominantly use Python, finding an ML engineer with sufficient expertise in JavaScript and TensorFlow.js can be difficult. Although the TensorFlow.js API and the TensorFlow Python API have similarities, understanding the differences and finding equivalent functions may require additional time and effort.

Another Reason to Avoid Using TensorFlow.js for Model Training

Another reason to avoid using TensorFlow.js for model training is the shortage of ready-made examples in JavaScript. Most existing examples are written in Python, and starting from an existing solution is much faster and more convenient than writing code from scratch. However, this advantage of Python only applies to the model training stage. When it comes to deployment, the differences between Python and JavaScript code become an issue again.

Since model data often requires preprocessing and post-processing, the amount of code that needs to be converted from Python to JavaScript can be quite substantial. This requires developers proficient in all relevant technologies (ML, Python, JavaScript, TensorFlow, TensorFlow.js, etc.). Therefore, it may be more practical to spend time training an ML engineer in JavaScript technologies rather than teaching a web developer ML.
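As an example of the kind of post-processing that has to be rewritten in JavaScript, the sketch below filters raw detections by confidence and converts normalized boxes to pixel coordinates. The output layout is an assumption: many TensorFlow object detection models return boxes as `[ymin, xmin, ymax, xmax]` in the 0 to 1 range alongside a parallel array of scores, but a given model may differ. The function name `toPixelBoxes` and the 0.5 default threshold are hypothetical.

```javascript
/**
 * Converts raw detection output into pixel-space boxes.
 * boxes:  array of [ymin, xmin, ymax, xmax], normalized to 0..1 (assumed layout)
 * scores: array of confidence values, same length and order as `boxes`
 */
function toPixelBoxes(boxes, scores, frameWidth, frameHeight, minScore = 0.5) {
  const results = [];
  for (let i = 0; i < scores.length; i++) {
    // Skip low-confidence detections.
    if (scores[i] < minScore) continue;
    const [ymin, xmin, ymax, xmax] = boxes[i];
    results.push({
      score: scores[i],
      box: {
        x: Math.round(xmin * frameWidth),
        y: Math.round(ymin * frameHeight),
        width: Math.round((xmax - xmin) * frameWidth),
        height: Math.round((ymax - ymin) * frameHeight),
      },
    });
  }
  return results;
}

// Hypothetical usage with plain arrays already read out of the model's tensors:
const pixelBoxes = toPixelBoxes(
  [[0.25, 0.5, 0.75, 1.0], [0, 0, 1, 1]],
  [0.9, 0.2],
  640,
  480
);
console.log(pixelBoxes);
```

In Python the same step is often a few lines of NumPy, so even small helpers like this one add up when a whole pipeline is ported.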

Final Thoughts on Leveraging TensorFlow for Machine Learning

The TensorFlow ecosystem provides powerful tools for training and executing ML models on virtually any platform, offering extensive opportunities to apply machine learning to various use cases.

Compared to other libraries, TensorFlow.js's broad capabilities and enhanced performance make it the ideal library for machine learning web applications.

However, TensorFlow.js is a relatively young technology, so ready-made solutions for specific tasks may be limited and hard to find. Additionally, despite their similarities, TensorFlow and TensorFlow.js have enough differences to create additional development challenges, which is something to consider when forming development teams and timelines.

Is TensorFlow right for your next ML project? Chat with one of our experts for answers and insights.
