Code reviews are how you scale quality, share knowledge, and earn trust. For mid-level and senior engineers, feedback isn’t just technical; it’s how you influence design, coach others, and build your reputation.

Done well, reviews accelerate learning and prevent costly mistakes. Done poorly, they create friction and slow teams down.

This guide is for engineers who want to sharpen their judgment, give better feedback, and help their teams move faster with fewer surprises. Code reviews are a craft – and strong ones start with knowing what good looks like, both in the code and in how you approach it.

We’ll walk through how to prepare a reviewable pull request, a real-world code review checklist, how senior engineers approach reviews, and the tools that take the busywork off your plate. Whether you’re shaping your own pull request habits or refining your team’s code review process, this guide is built to scale with your experience.

Let’s start with the groundwork.

Before You Review: Setting the Groundwork

The quality of a code review often depends on what lands in front of the reviewer. If a pull request is bloated, unclear, or lacks context, even the best reviewer will struggle. That’s why good reviews start long before feedback is given – they start with how work is shaped and shared.

Break down changes into small, logical units. A “reviewable” PR usually touches one feature, fix, or refactor – not all three. Keep it under 300–400 lines when possible. Large PRs invite skimmed feedback, overlooked issues, and merge delays. Small, focused ones get thoughtful attention.

Use automation to help enforce this. A CI check, such as a GitHub Actions workflow, can warn when a PR exceeds a size threshold, nudging authors to split early.
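Here is a minimal sketch of such a check. It assumes the PR branch is checked out in CI with `origin/main` as its base. Both the base ref and the 400-line limit are assumptions you should adjust. The script totals the added and deleted lines that `git diff --numstat` reports:

```python
import subprocess

# Assumed limit, based on the 300-400 line guideline; tune it for your team.
MAX_CHANGED_LINES = 400


def count_changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output.

    Each line is tab-separated (added, deleted, path). Binary files
    report "-" for both counts and are skipped.
    """
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) < 3 or not (parts[0].isdigit() and parts[1].isdigit()):
            continue  # blank line or binary file
        total += int(parts[0]) + int(parts[1])
    return total


def check_pr_size(base_ref: str = "origin/main") -> int:
    """Return 0 if the PR is within the limit, 1 otherwise (usable as an exit code)."""
    numstat = subprocess.run(
        ["git", "diff", "--numstat", f"{base_ref}...HEAD"],
        capture_output=True, text=True, check=True,
    ).stdout
    changed = count_changed_lines(numstat)
    if changed > MAX_CHANGED_LINES:
        print(f"warning: {changed} changed lines (limit {MAX_CHANGED_LINES}); "
              "consider splitting this PR")
        return 1
    print(f"ok: {changed} changed lines")
    return 0
```

A CI step could call `check_pr_size()` and exit with its return value. In GitHub Actions, setting `continue-on-error: true` on that step keeps the warning advisory rather than blocking. The three-dot range diffs against the merge base, so commits that landed on the base branch after you branched are not counted. With a shallow clone, the checkout may need more history for git to find that merge base.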

Strong code review practices start here. A well-structured PR makes the entire review process smoother, faster, and more reliable. Whether you follow a formal code review checklist or just apply your team’s guidelines consistently, the goal is the same: make it easy for reviewers to focus on what matters.

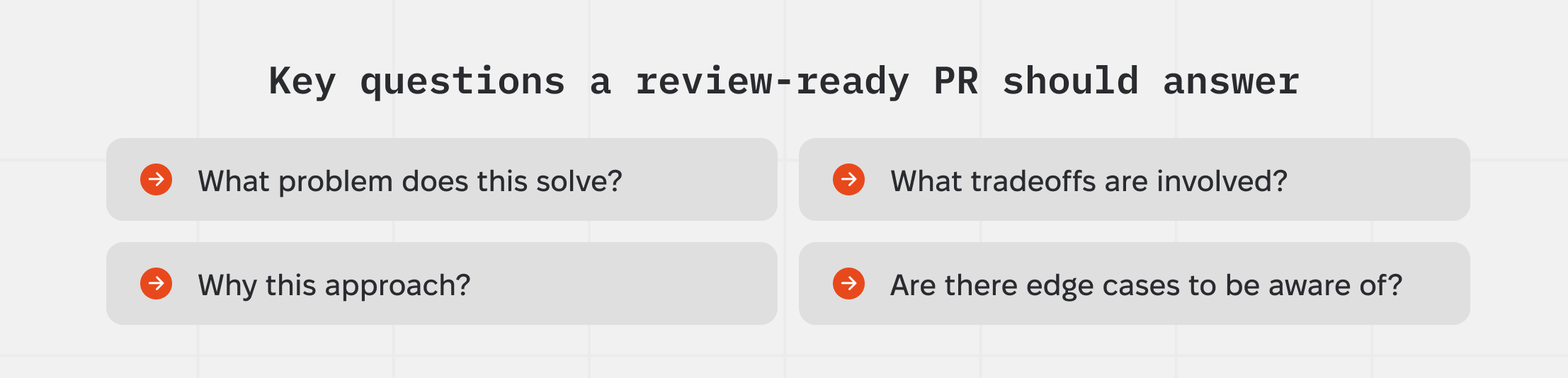

A review-ready PR tells a clear story:

- What problem does this solve?

- Why this approach?

- What tradeoffs are involved?

- Are there edge cases to be aware of?

Include links to related tickets, context for reviewers, and any relevant test or migration notes. If you had to explain this change in a Slack thread, that explanation should live in the PR description.

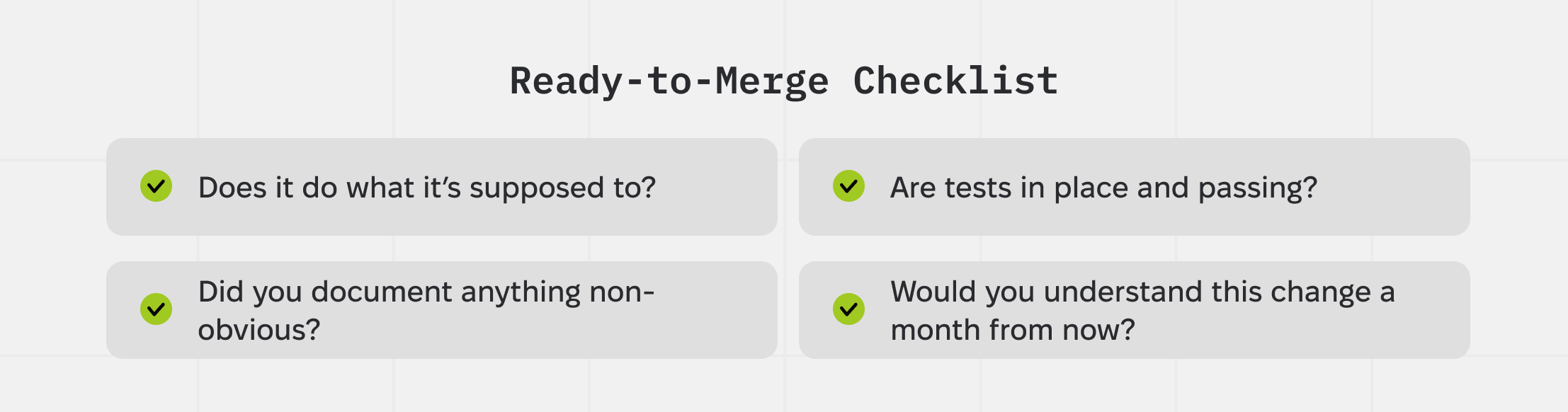

Finally, run a pre-flight check:

- Does it do what it’s supposed to?

- Are tests in place and passing?

- Did you document anything non-obvious?

- Would you understand this change a month from now?

These habits build trust in your work and keep reviews focused on meaningful improvement – not detective work.

What to Look For: The Real-World Review Checklist

Start with the basics: Does the code meet requirements? Functionality comes first – it should solve the right problem, not quietly introduce another.

Next, consider readability. Can another engineer step into this codebase and understand what’s going on? Clear names, simple logic, and modular structure often beat clever tricks.

Then, maintainability. Can this code evolve without causing breakage? Is logic reusable? Are the abstractions reasonable?

Don’t overlook performance. Any obvious bottlenecks? Blocking operations in the hot path? You don’t need to optimize everything – just flag the outliers.
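One common outlier is a linear search hiding inside a loop. In this hypothetical sketch the function names are illustrative. Both functions return the same result, but the first scans `active_ids` once per user, so its cost grows with the product of the two list sizes:

```python
def find_inactive_slow(user_ids: list[int], active_ids: list[int]) -> list[int]:
    # `uid not in active_ids` scans the whole list on every iteration:
    # O(len(user_ids) * len(active_ids)) overall.
    return [uid for uid in user_ids if uid not in active_ids]


def find_inactive_fast(user_ids: list[int], active_ids: list[int]) -> list[int]:
    # Build a set once; each membership check is then O(1) on average.
    active = set(active_ids)
    return [uid for uid in user_ids if uid not in active]


print(find_inactive_fast([1, 2, 3, 4], [2, 4]))  # [1, 3]
```

With a few dozen IDs the difference is negligible. That is why this belongs in the "flag the outlier" category rather than something to optimize everywhere.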

On to security. Is untrusted input handled carefully? Are secrets exposed? Is auth logic consistent? Security issues are often subtle but serious – don’t skip them.
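Handling input carefully often comes down to whether the input is treated as data or as code. This minimal sketch uses Python’s built-in `sqlite3` module and a hypothetical `users` table. The first function shows the pattern to flag in review; the second uses parameter binding instead:

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Flag this in review: untrusted input is spliced into the SQL text,
    # so a crafted username can change what the query means.
    return conn.execute(
        f"SELECT id, name FROM users WHERE name = '{username}'"
    ).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str):
    # The "?" placeholder binds the input as a value, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # every row in the table
print(find_user_safe(conn, payload))    # []
```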

Then there’s testing. Do tests exist? Are they meaningful? Do they break if the code breaks?
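A quick way to answer the last question is to imagine the implementation is wrong and ask whether the test would notice. In this hypothetical example, `apply_discount` is illustrative. The first test passes no matter what the arithmetic does, while the second pins exact results and boundaries:

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a percentage discount, rounded down to whole cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


def test_discount_weak():
    # Passes even if the formula is wrong: it only checks the return type.
    assert isinstance(apply_discount(1000, 10), int)


def test_discount_meaningful():
    # Pins exact values and boundaries, so a broken formula fails this test.
    assert apply_discount(1000, 10) == 900
    assert apply_discount(999, 50) == 499   # rounds down
    assert apply_discount(1000, 0) == 1000
    assert apply_discount(1000, 100) == 0
    try:
        apply_discount(1000, 101)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for percent > 100")
```

The test functions follow pytest naming conventions. They are plain functions using `assert`, so they also run when called directly.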

Finally, documentation. Will someone looking at this code later know how to use it or what it changes? That could be in code comments, PR notes, migration steps, or a README update.

And step back – does this change fit the broader system? Is it consistent with existing patterns? Does it avoid architectural drift?

Using this checklist as part of your regular code review process helps ensure consistency and long-term quality.

Review Focus Areas

How Senior Engineers Review Code

Senior engineers shape systems and culture. A good review isn’t just about correctness – it’s about guiding decisions, mentoring others, and removing risk early.

Skim → Dive Deep → Final Pass

Start with a skim. What’s the intent of this change? Is the scope reasonable? Does it conceptually make sense?

Then dive deep. Focus on correctness, scalability, coupling, and edge cases. Look at what the change affects, not just the lines in the diff.

Finally, make a pass for polish: naming, readability, test completeness. Ask yourself: Would I want to maintain this?

Ask Questions, Don’t Dictate

Instead of “This is wrong,” ask:

- “What led you to this approach?”

- “How would this behave under X condition?”

- “Is this pattern consistent with how we’ve handled Y before?”

The phrasing matters. Questions invite collaboration; directives discourage participation and learning. Trust is built through curiosity, not control.

Use Tags to Signal Intent

Comments are easier to digest when you make priorities clear:

- issue: Must fix

- suggestion: Optional improvement

- nitpick: Minor style comment

- question: Clarification or intent

- praise: Acknowledge good work

Tagging sets the tone and tells the author which comments must be addressed before merging and which are optional.
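Because tags are consistent prefixes, tooling can read them easily. The following is a hypothetical sketch. The `ReviewComment` type and the helper names are illustrative and not part of any review platform’s API. The code sorts comments by tag and lists the ones that must be resolved before merge:

```python
from dataclasses import dataclass

# The tags from the list above; only "issue" blocks the merge.
KNOWN_TAGS = {"issue", "suggestion", "nitpick", "question", "praise"}
BLOCKING_TAGS = {"issue"}


@dataclass
class ReviewComment:
    tag: str   # one of KNOWN_TAGS, or "untagged"
    body: str


def parse_comment(text: str) -> ReviewComment:
    """Split a leading 'tag:' prefix (case-insensitive) from the comment body."""
    prefix, sep, rest = text.partition(":")
    tag = prefix.strip().lower()
    if sep and tag in KNOWN_TAGS:
        return ReviewComment(tag, rest.strip())
    return ReviewComment("untagged", text.strip())


def blocking_comments(comments: list[str]) -> list[ReviewComment]:
    """Return only the comments the author must address before merging."""
    parsed = [parse_comment(c) for c in comments]
    return [c for c in parsed if c.tag in BLOCKING_TAGS]


comments = [
    "issue: the access token is written to the log in plaintext",
    "nitpick: rename tmp to retry_count",
    "praise: nice coverage of the timeout path",
    "Why not reuse the existing HTTP client?",
]
for c in blocking_comments(comments):
    print(c.body)  # the access token is written to the log in plaintext
```

This sketch treats untagged comments as non-blocking. A team could instead flag them so reviewers add a tag, which keeps the signal consistent.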

Bring in Experts Early

If a PR touches high-risk areas such as auth, infrastructure, or money-handling code, don’t go it alone. Tag a domain owner, drop a Slack message, or add a CODEOWNERS rule so the right reviewers are requested automatically.

The right time to escalate is before it ships, not after something breaks.

Patterns to Avoid (That Still Happen All the Time)

Even experienced teams fall into these traps. They quietly damage quality and morale if left unaddressed.

Tools That Do the Boring Stuff For You

Strong engineers know what to offload. Let machines handle the trivial so humans can focus on what matters: clarity, design, and risk.

Linters, Formatters, and SAST: Automate the Obvious

Tools like ESLint, Prettier, Black, or Flake8 standardize style and catch simple issues. Run them in CI. Format on save. Don’t let a reviewer waste time on spacing or trailing commas.

SAST tools – like SonarQube, Semgrep, CodeQL, or Codacy – go deeper. They detect:

- Insecure patterns (e.g., overly permissive regexes, unsafe user input)

- Missed edge cases (e.g., unchecked values, nulls)

- Maintainability risks (e.g., excessive complexity)

Customize your rules to match your stack. And make sure they run before a human sees the code.

Pro tip: If your team keeps reviewing for the same types of errors, automate them. If the tool doesn’t exist, script it.
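Scripting a check can take less code than it sounds. This hypothetical sketch assumes a Python codebase. It uses the standard-library `ast` module to flag calls to functions on a team deny list. The name `legacy_fetch` is a stand-in for whatever internal helper your reviewers keep asking authors to replace:

```python
import ast

# Hypothetical team rules: function name -> message shown to the author.
BANNED_CALLS = {
    "eval": "avoid eval() on anything that could contain user input",
    "legacy_fetch": "legacy_fetch() is deprecated; use the shared HTTP client",
}


def check_source(source: str, filename: str = "<string>") -> list[str]:
    """Return one finding per call to a banned function name."""
    findings = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        # Match bare calls (eval(...)) and attribute calls (client.legacy_fetch(...)).
        name = func.id if isinstance(func, ast.Name) else getattr(func, "attr", None)
        if name in BANNED_CALLS:
            findings.append(f"{filename}:{node.lineno}: {BANNED_CALLS[name]}")
    return findings


for finding in check_source("payload = eval(request_body)\n"):
    print(finding)  # <string>:1: avoid eval() on anything that could contain user input
```

You could run this over changed files in CI, alongside the linters. Tools like Semgrep let you express similar rules declaratively. Once the rule list grows, that is often easier to maintain than a custom script.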

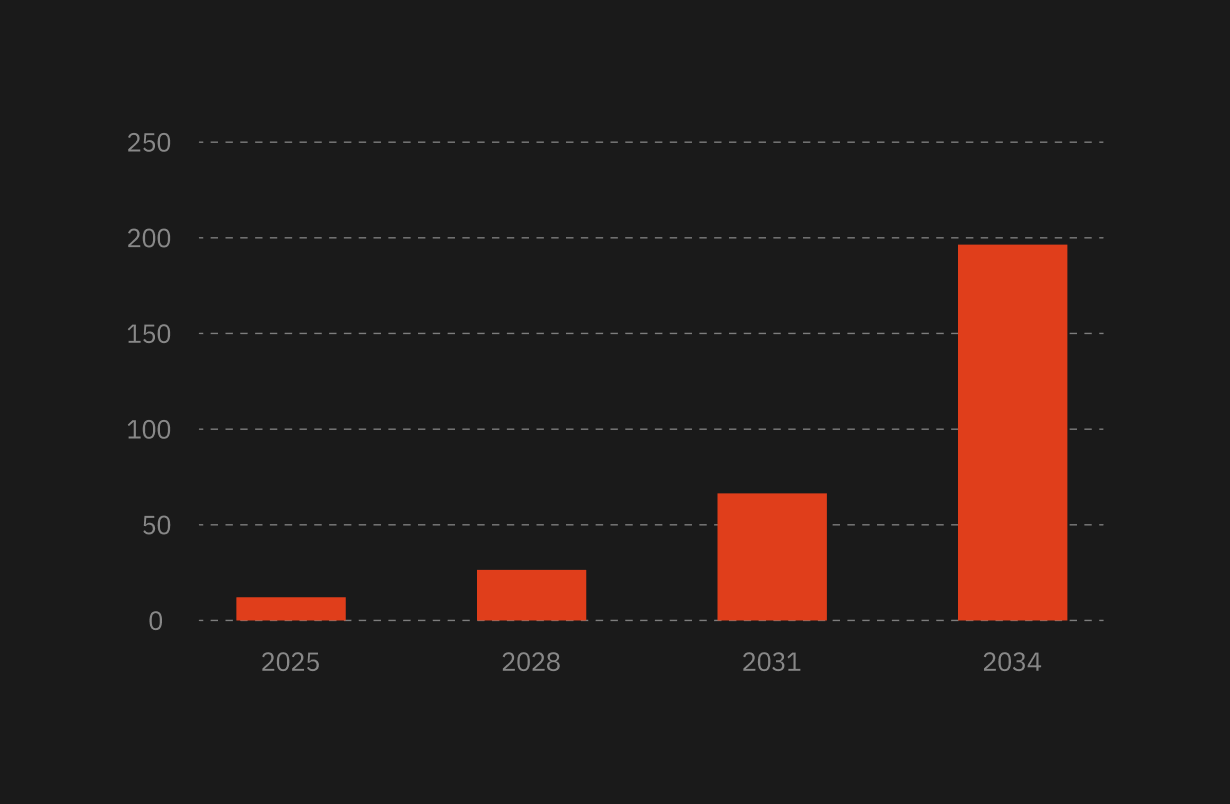

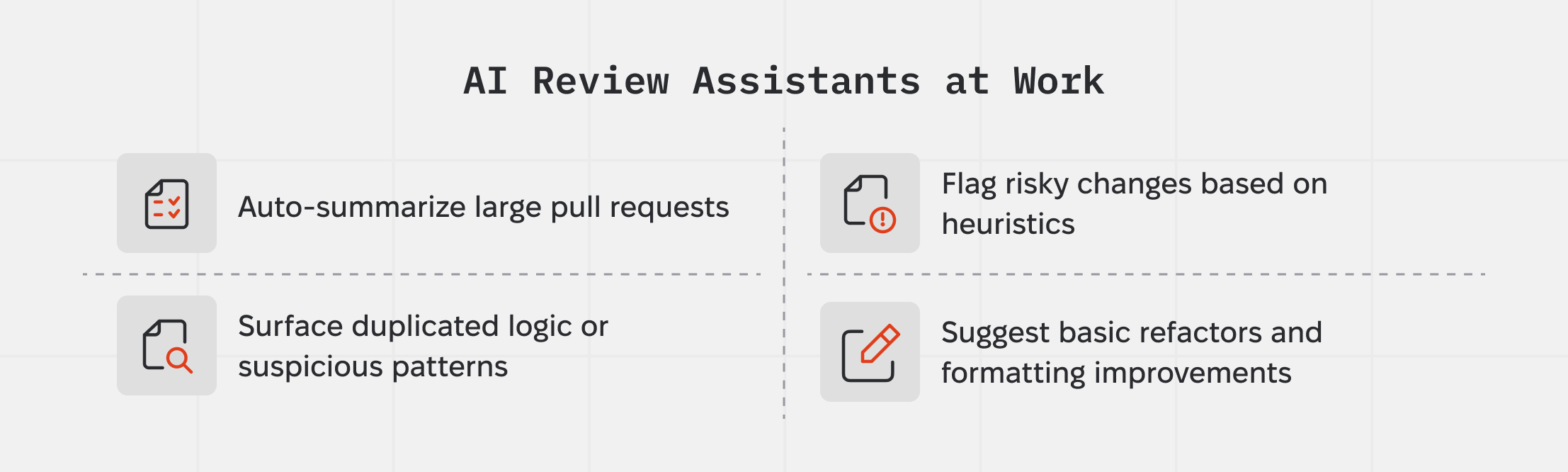

AI Review Assistants: What’s Real and What’s Noise

AI tools like GitHub Copilot Reviews, CodeRabbit, and CodiumAI are becoming increasingly common in the review pipeline. Used correctly, they can:

- Auto-summarize large pull requests

- Surface duplicated logic or suspicious patterns

- Flag risky changes based on heuristics

- Suggest basic refactors and formatting improvements

Used poorly, they generate false confidence and noise.

Treat AI like a junior developer: quick, occasionally helpful, but in constant need of supervision. Most AI tools lack the context to assess architectural alignment, business logic, or nuanced tradeoffs. For example, an assistant might suggest extracting a repeated block into a helper function without recognizing that the two blocks have different side effects under load.

In short: AI can help you move faster – but only if you stay in the driver’s seat.

Conclusion

Code reviews are how teams scale quality, build trust, and grow engineers. They reflect engineering maturity: how well we communicate, how quickly we learn, and how seriously we take ownership.

For mid-level and senior engineers, reviews are one of the clearest paths to leadership. The way you review – the clarity of your thinking, the tone you set, the problems you prevent – becomes part of your reputation.

So here’s the one habit to start today: Review like you own the system – and like you want the next person to succeed.

Everything else will follow.
