For nearly two decades, MLS data exchange relied on RETS — the Real Estate Transaction Standard. RETS gave the industry its first common framework for pulling property data at scale and was widely adopted across MLSs. Even today, some systems continue to run on it. But RETS was designed in an earlier era of data transfer. It depends on batch downloads, XML payloads, and vendor-specific implementations that made integrations slower and harder to maintain in a world moving toward web and cloud technologies.

RESO's Data Dictionary and Web API were designed to take the next step. The promise was straightforward: a modern, web-friendly standard that speaks one language across MLSs, making integrations faster, cheaper, and easier to maintain.

In practice, the story is less tidy. Providers extend the dictionary with their own fields or maintain multiple versions in parallel. Consumers discover that even “compliant” feeds still require custom mappings, error-handling workarounds, and pagination fixes. Plug-and-play often turns into patch-and-fix.

That gap between the standard and real-world use shows up in the same places again and again. Let’s look at the four issues that keep RESO APIs from delivering on their full promise.

Issue 1: Data Model Extensions and Inconsistent Contracts

RESO’s Data Dictionary was meant to give the industry one common language — so that “Active” in one MLS means the same thing in another. In reality, it works more like a shared grammar with lots of local dialects. Providers extend the model with their own fields, hold on to legacy terms, or adopt new versions at different speeds. To the outside world, the data looks standardized; under the hood, every MLS has its own accent.

Provider perspective

From the provider’s side, these extensions are practical. They keep systems stable, allow MLSs to reflect local market quirks, and make sure high-volume feeds don’t break when standards change. The problem is that many of these fields aren’t documented in a way others can easily use, and version drift means two “RESO-compliant” APIs may still look quite different.

Consumer perspective

For consumers — brokerages, platforms, and vendors — this feels like chasing a moving target. The same status might show up as Active, A, or ACT. Local fields appear with no context. To make sense of it, consumers spend time (and money) building normalization layers just to line up multiple MLS feeds. The whole point of standardization — plug-and-play integration — is weakened by this inconsistency.
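A normalization layer usually starts with something as small as a status alias table. The sketch below maps raw codes onto StandardStatus-style labels (Active, Pending, Closed). The alias values and the `overrides` mechanism for per-MLS codes are illustrative assumptions, not a published list. Unknown codes come back as `None` so they can be logged rather than guessed.

```python
from typing import Dict, Mapping, Optional

# Illustrative aliases only: the raw codes ("A", "ACT", ...) are examples, not a published list.
DEFAULT_STATUS_ALIASES: Dict[str, str] = {
    "active": "Active", "a": "Active", "act": "Active",
    "pending": "Pending", "p": "Pending", "pnd": "Pending",
    "closed": "Closed", "sold": "Closed",
}


def normalize_status(raw: Optional[str],
                     overrides: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Map one feed's status code to a canonical StandardStatus-style label.

    `overrides` (hypothetical) holds per-MLS codes, keyed in lowercase, that take
    precedence over the shared defaults. Unknown codes return None for review.
    """
    if raw is None:
        return None
    code = raw.strip().lower()
    if overrides and code in overrides:
        return overrides[code]
    return DEFAULT_STATUS_ALIASES.get(code)
```

In practice, each MLS feed would have its own `overrides` table. That is exactly the per-source mapping work consumers end up maintaining.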

"RESO gives us a solid modern framework, but the tricky part is that every MLS still implements it a bit differently. One feed might extend the Data Dictionary with local fields, another might return a 403 when you’ve actually hit a rate limit, and a third might use pagination tokens that expire too quickly. As developers, we end up writing extra logic for field mapping, smarter error handling, and pagination retries just to keep pipelines stable. The standard itself works; what we need now is more consistent usage so integrations behave the same way everywhere."

Alexey Kolokolov, Senior Software Engineer, PropTech Project

Recommendations

- Create a shared registry where all non-standard fields are published and documented.

- Tighten certification to check not only required fields, but also whether enumerations and formats are consistent.

- Push for clearer upgrade timelines so MLSs don’t run multiple versions indefinitely.

- Encourage providers to publish machine-readable field dictionaries to cut down on guesswork.
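RESO Web API servers already describe their schema through an OData `$metadata` document (CSDL XML). That document is one machine-readable starting point for spotting local extensions. The sketch below lists the properties of one entity type and flags any that fall outside a known standard set. `STANDARD_FIELDS` is a tiny illustrative subset, not the real Data Dictionary, and `SAMPLE_METADATA` is hand-written rather than taken from an actual MLS.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List

# OData v4 CSDL namespace used for EntityType/Property elements in $metadata.
EDM_NS = "{http://docs.oasis-open.org/odata/ns/edm}"

# Illustrative subset only; a real check would load the full published Data Dictionary.
STANDARD_FIELDS = {"ListingKey", "StandardStatus", "ListPrice", "ModificationTimestamp"}


def entity_properties(metadata_xml: str, entity: str = "Property") -> List[str]:
    """Return the property names declared for one EntityType in a CSDL XML document."""
    root = ET.fromstring(metadata_xml.strip())
    names: List[str] = []
    for entity_type in root.iter(f"{EDM_NS}EntityType"):
        if entity_type.get("Name") == entity:
            # findall() only matches direct <Property> children, not <Key>/<PropertyRef>.
            names.extend(p.get("Name", "") for p in entity_type.findall(f"{EDM_NS}Property"))
    return names


def local_extensions(fields: Iterable[str], standard: Iterable[str] = STANDARD_FIELDS) -> List[str]:
    """Fields the provider exposes that are not in the standard set."""
    known = set(standard)
    return sorted(f for f in fields if f not in known)


# Hand-written sample shaped like a $metadata response (not from a real MLS).
SAMPLE_METADATA = """
<edmx:Edmx xmlns:edmx="http://docs.oasis-open.org/odata/ns/edmx" Version="4.0">
  <edmx:DataServices>
    <Schema xmlns="http://docs.oasis-open.org/odata/ns/edm" Namespace="Example">
      <EntityType Name="Property">
        <Key><PropertyRef Name="ListingKey"/></Key>
        <Property Name="ListingKey" Type="Edm.String"/>
        <Property Name="StandardStatus" Type="Edm.String"/>
        <Property Name="XYZ_LocalZoningCode" Type="Edm.String"/>
      </EntityType>
    </Schema>
  </edmx:DataServices>
</edmx:Edmx>
"""
```

A shared registry would let consumers go one step further and look up what each flagged field actually means.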

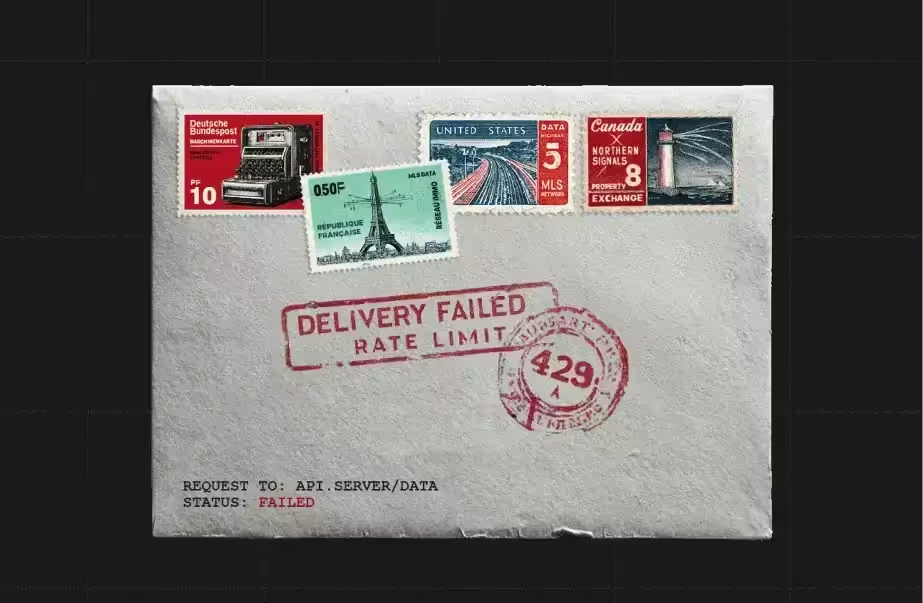

Issue 2: Unclear Error Models and Rate Limits

When systems hit an error, clarity is everything. But in RESO APIs, error messages often create more confusion than guidance. A broker’s tech team might see a “403 Forbidden” message and spend hours debugging credentials — only to discover they’ve simply tripped an invisible rate limit. Instead of helping, the system leaves both providers and consumers guessing.

Why providers do it

Providers keep error responses vague to protect internal infrastructure and reduce support overhead. Publishing exact quotas or detailed error models can feel risky — exposing too much about system internals. As a result, they lean on generic codes and undocumented thresholds, assuming most partners will “figure it out” as they go.

Why consumers struggle

On the other side, consumers are left in trial-and-error mode. Is the 403 about a bad token, or did the system just block them for hitting too many requests? How many calls per minute are safe before throttling kicks in? With no consistent model across MLSs, every integration requires detective work — costing time, money, and trust.
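Until limits are published, a common defensive move is to pace requests on the client side so the integration stays under whatever the unknown threshold is. The sketch below is a standard token bucket. The rate and capacity values are placeholders a team would tune per provider; they are not known RESO or MLS limits.

```python
import time
from typing import Callable


class TokenBucket:
    """Client-side pacing: allow bursts up to `capacity`, refilling at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = float(capacity)
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self) -> bool:
        """Take one token if available; never blocks."""
        self._refill()
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

    def wait_time(self) -> float:
        """Seconds until the next token becomes available."""
        self._refill()
        return 0.0 if self.tokens >= 1.0 else (1.0 - self.tokens) / self.rate

    def acquire(self, sleep: Callable[[float], None] = time.sleep) -> None:
        """Block until a request may be sent."""
        while not self.try_acquire():
            sleep(self.wait_time())
```

For example, `TokenBucket(rate_per_sec=2, capacity=5)` followed by `bucket.acquire()` before each call keeps a sync job at roughly two requests per second, with short bursts allowed. The trade-off is that a conservative guess may leave capacity unused.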

What would fix it

- Standardized error language: Require structured JSON error codes with clear types, details, and remediation steps.

- Transparent limits: Mandate use of HTTP 429 (“Too Many Requests”) with a Retry-After header instead of vague 403s.

- Published policies: Providers should disclose rate limits in docs or via a simple /limits endpoint, so consumers can plan instead of guess.
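On the consumer side, honoring these conventions where they exist is straightforward. Per HTTP semantics, `Retry-After` carries either a number of seconds or an HTTP-date. The sketch below parses both forms and sorts responses into coarse buckets. One piece is an assumption, not a rule from RESO or any provider: the sketch treats a 403 that carries `Retry-After` as throttling, while a bare 403 stays "ambiguous" and is escalated rather than blindly retried.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Mapping, Optional


def parse_retry_after(value: Optional[str], now: Optional[datetime] = None) -> Optional[float]:
    """Return seconds to wait, or None if the header is absent or unparseable."""
    if value is None:
        return None
    value = value.strip()
    if value.isdigit():  # delta-seconds form, e.g. "120"
        return float(value)
    try:  # HTTP-date form, e.g. "Wed, 21 Oct 2015 07:28:00 GMT"
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # older Pythons raise TypeError, newer ValueError
        return None
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)  # HTTP-dates are always GMT
    now = now or datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())


@dataclass
class Outcome:
    kind: str  # "ok", "rate_limited", "auth", "ambiguous_403", or "other"
    retry_after: Optional[float] = None


def classify(status: int, headers: Mapping[str, str],
             now: Optional[datetime] = None) -> Outcome:
    # Header names are case-insensitive, so normalize them before lookup.
    lowered = {k.lower(): v for k, v in headers.items()}
    retry = parse_retry_after(lowered.get("retry-after"), now)
    if 200 <= status < 300:
        return Outcome("ok")
    if status == 429:
        return Outcome("rate_limited", retry)
    if status == 403:
        # Assumption: Retry-After on a 403 hints at throttling; otherwise stay ambiguous.
        return Outcome("rate_limited" if retry is not None else "ambiguous_403", retry)
    if status == 401:
        return Outcome("auth")
    return Outcome("other", retry)
```

The more providers adopt 429 with `Retry-After`, the less any client has to rely on heuristics like the 403 branch.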

Issue 3: Server-Side Pagination Reliability

Pulling MLS data isn’t a one-click download — we’re talking about hundreds of thousands of records. To keep things manageable, APIs use pagination: breaking the dataset into smaller, sequential pages. In theory, this makes large transfers smooth. In practice, different implementations create gaps, duplicates, and frustration.

Why providers paginate

MLSs can’t just hand over millions of records in one go. To keep systems fast and reliable, providers break data into pages using tokens or cursors. The RESO spec gives them room to choose their own approach — but that flexibility leads to very different implementations across MLSs.
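Because the RESO Web API is built on OData, server-driven paging typically returns records in a `value` array plus an `@odata.nextLink` URL for the next page. The sketch below follows that link until it disappears. `fetch` is a hypothetical injected function that performs the authenticated GET and returns decoded JSON. The `max_pages` guard is an assumption added to catch servers that hand back a looping link.

```python
from typing import Any, Callable, Dict, Iterator, Optional

Page = Dict[str, Any]


def iter_records(first_url: str, fetch: Callable[[str], Page],
                 max_pages: int = 100_000) -> Iterator[Dict[str, Any]]:
    """Yield records page by page, following @odata.nextLink until it is absent."""
    url: Optional[str] = first_url
    pages = 0
    while url is not None:
        if pages == max_pages:
            raise RuntimeError("page limit reached; nextLink may be looping")
        page = fetch(url)
        pages += 1
        yield from page.get("value", [])
        url = page.get("@odata.nextLink")  # absent on the last page
```

This loop is only as reliable as the server's cursor. If a link expires or the underlying data shifts between requests, the records it yields can still be incomplete.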

What consumers experience

For data consumers, those differences translate into headaches. Tokens may expire mid-sync, records get duplicated, or entire listings go missing. A dataset that looks “complete” on delivery often isn’t — forcing teams to restart syncs or build extra logic to patch the gaps.
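A typical patch layer collapses records by key after a sync, keeps the most recently modified copy, and counts duplicates so they can be reported rather than silently absorbed. The sketch below assumes records carry `ListingKey` and an ISO 8601 `ModificationTimestamp`, both Data Dictionary field names. The `missing_count` comparison assumes the server reports a total, for example via OData's `@odata.count`, which not every provider supports.

```python
from datetime import datetime
from typing import Any, Dict, Iterable, Tuple

Record = Dict[str, Any]


def _parse_ts(value: str) -> datetime:
    # fromisoformat() only accepts a trailing "Z" from Python 3.11, so normalize it.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def merge_pages(records: Iterable[Record], key: str = "ListingKey",
                ts_field: str = "ModificationTimestamp") -> Tuple[Dict[str, Record], int]:
    """Collapse records by key, keeping the newest copy; also return the duplicate count."""
    merged: Dict[str, Record] = {}
    duplicates = 0
    for rec in records:
        k = rec[key]
        prev = merged.get(k)
        if prev is not None:
            duplicates += 1
            if _parse_ts(rec[ts_field]) < _parse_ts(prev[ts_field]):
                continue  # the stored copy is newer; keep it
        merged[k] = rec
    return merged, duplicates


def missing_count(merged: Dict[str, Record], expected_total: int) -> int:
    """How many records the server claims to have that never arrived."""
    return max(0, expected_total - len(merged))
```

A nonzero duplicate count or missing count is a signal to restart or re-verify the sync, which is the reprocessing cost described here.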

The impact on both sides

Providers succeed in protecting throughput, but consumers lose confidence in the feeds. Vendors spend more on error handling and reprocessing, while providers deal with support escalations. Everyone pays a cost for inconsistency.

How to make it better

One way forward is for providers to support stable snapshots — freezing the dataset during pagination so that results don’t shift mid-process. Additionally, APIs should enable incremental updates, allowing consumers to query “since last modified” records instead of relying on fragile page tokens. Finally, RESO itself could step in with shared rules that define token validity and duplicate handling consistently across MLSs, giving everyone a predictable framework to work with.
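The "since last modified" approach can be sketched as keyset pagination over standard OData query options. The query orders by `ModificationTimestamp` with `ListingKey` as a tie-breaker and filters past the last record seen, instead of relying on a server-issued token. Several details are assumptions: the server must support these `$filter`/`$orderby` expressions, timestamps must be passed back in exactly the format the server returned, and `fetch` is a hypothetical injected GET helper.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple
from urllib.parse import quote, urlencode

# (ModificationTimestamp, ListingKey) of the last record successfully processed.
Checkpoint = Tuple[str, str]


def build_incremental_url(base: str, checkpoint: Optional[Checkpoint], top: int = 200) -> str:
    params = {
        "$orderby": "ModificationTimestamp asc,ListingKey asc",
        "$top": str(top),
    }
    if checkpoint is not None:
        ts, key = checkpoint
        key_literal = "'" + key.replace("'", "''") + "'"  # OData string literal escaping
        params["$filter"] = (
            f"ModificationTimestamp gt {ts} or "
            f"(ModificationTimestamp eq {ts} and ListingKey gt {key_literal})"
        )
    # quote_via=quote encodes spaces as %20 rather than "+".
    return f"{base}?{urlencode(params, quote_via=quote)}"


def sync_since(base: str, checkpoint: Optional[Checkpoint],
               fetch: Callable[[str], Dict[str, Any]],
               top: int = 200) -> Tuple[List[Dict[str, Any]], Optional[Checkpoint]]:
    """Pull every record changed after `checkpoint`; return them plus the new checkpoint."""
    out: List[Dict[str, Any]] = []
    while True:
        batch = fetch(build_incremental_url(base, checkpoint, top)).get("value", [])
        out.extend(batch)
        if batch:
            last = batch[-1]
            checkpoint = (last["ModificationTimestamp"], last["ListingKey"])
        if len(batch) < top:  # a short page means we have caught up
            return out, checkpoint
```

A listing edited mid-sync gets a newer timestamp and moves to the end of the ordering. The job therefore picks it up later instead of skipping it, which is the property fragile page tokens lack. The checkpoint is simply persisted between runs.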

Issue 4: Authentication and Authorization Variability

Authenticating to an MLS should be routine: request a token, call the API, refresh when needed. In reality, every provider does it differently. One MLS hands out static API keys, another relies on OAuth 2.0 client credentials, and a third enforces hourly refresh cycles. Even within “OAuth-compliant” systems, token lifetimes and refresh rules vary.
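One way consumers contain this variety is to hide each provider's scheme behind a common "give me request headers" interface. In the sketch below, the static-key header name and scheme are hypothetical defaults, since each provider documents its own. The OAuth 2.0 client credentials request follows the standard form fields (`grant_type=client_credentials`, with client credentials in the body) and a Bearer header. `post_form` is an injected HTTP helper that is assumed to return parsed JSON. Token caching is deliberately left out to keep the sketch short.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Mapping
from urllib.parse import urlencode


@dataclass
class StaticKeyAuth:
    key: str
    header: str = "Authorization"  # hypothetical default; providers document their own
    scheme: str = "Bearer"

    def headers(self) -> Dict[str, str]:
        return {self.header: f"{self.scheme} {self.key}".strip()}


@dataclass
class ClientCredentialsAuth:
    token_url: str
    client_id: str
    client_secret: str
    post_form: Callable[[str, str], Mapping[str, object]]  # hypothetical: POSTs form, returns JSON
    scope: str = ""

    def token_request_body(self) -> str:
        form = {
            "grant_type": "client_credentials",
            "client_id": self.client_id,
            "client_secret": self.client_secret,
        }
        if self.scope:
            form["scope"] = self.scope
        return urlencode(form)

    def headers(self) -> Dict[str, str]:
        # No caching here: every call requests a fresh access token.
        resp = self.post_form(self.token_url, self.token_request_body())
        return {"Authorization": f"Bearer {resp['access_token']}"}
```

The calling code only ever asks for `headers()`. Adding another MLS means adding or configuring one object rather than another code path.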

Why providers diverge

Each MLS makes choices based on its own constraints. Some prioritize ease of use with long-lived tokens, others enforce frequent renewals to meet security policies, and a few are still tied to legacy infrastructure. None of these approaches are wrong in isolation, but together they create a fragmented ecosystem.

The consumer burden

For data consumers, fragmentation means juggling multiple playbooks. A brokerage pulling from several MLSs may need to maintain daily refresh jobs, handle different token formats, and troubleshoot inconsistent error messages. What should be a solved, background task becomes an ongoing operational burden.

Toward consistency

The industry doesn’t need new technology — just alignment. Standardizing on OAuth 2.1 or OpenID Connect with predictable grant flows, setting minimum token lifetimes, and adopting refresh tokens with clear rules would make integrations dramatically simpler. RESO could go further by offering a federated identity reference model, giving providers a ready-made option and consumers a consistent way to connect.
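Predictable lifetimes and refresh rules make the client side almost mechanical. The sketch below caches an access token, renews it a configurable number of seconds before `expires_in` runs out, and uses a refresh token when the server issued one. Otherwise it repeats the client credentials grant; OAuth 2.0 discourages issuing refresh tokens for that grant. `request_token` is a hypothetical injected function that POSTs the form to the token endpoint. The 60-second skew and the 300-second fallback when `expires_in` is missing are assumptions, not values from any standard.

```python
import time
from typing import Callable, Dict, Mapping, Optional


class TokenManager:
    """Caches an access token and renews it shortly before it expires."""

    def __init__(self, request_token: Callable[[Dict[str, str]], Mapping[str, object]],
                 client_id: str, client_secret: str, skew_seconds: float = 60.0,
                 default_ttl: float = 300.0, clock: Callable[[], float] = time.time) -> None:
        self.request_token = request_token
        self.client_id = client_id
        self.client_secret = client_secret
        self.skew = skew_seconds
        self.default_ttl = default_ttl  # assumed lifetime if the server omits expires_in
        self.clock = clock
        self._access: Optional[str] = None
        self._refresh: Optional[str] = None
        self._expires_at = 0.0

    def _store(self, resp: Mapping[str, object]) -> None:
        self._access = str(resp["access_token"])
        self._expires_at = self.clock() + float(resp.get("expires_in", self.default_ttl))
        if "refresh_token" in resp:
            # Keep the newest refresh token; some servers rotate it on every use.
            self._refresh = str(resp["refresh_token"])

    def access_token(self) -> str:
        if self._access is not None and self.clock() < self._expires_at - self.skew:
            return self._access  # still comfortably valid
        if self._refresh is not None:
            form = {"grant_type": "refresh_token", "refresh_token": self._refresh}
        else:
            form = {"grant_type": "client_credentials"}
        form.update(client_id=self.client_id, client_secret=self.client_secret)
        self._store(self.request_token(form))
        return str(self._access)
```

A production version would also fall back to a fresh client credentials grant when a refresh is rejected. With consistent minimum lifetimes across MLSs, though, one class like this could serve every feed.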

"Aggregator platforms like Trestle, Spark, Bridge, and MLS Grid try to make things easier by collecting data from multiple MLSs, normalizing it, and exposing it through their own APIs. They help with authentication and central access, but not everything becomes seamless. Each aggregator still has its own specifics, and if you need data from many MLSs, you often have to use several aggregators — which brings some of the same inconsistencies back, just on a smaller scale. The real challenge now is getting uniform behavior across both MLSs and aggregators." - Alexey Kolokolov, Senior Software Engineer, PropTech Project

Conclusion

RESO has taken the industry far by replacing fragmented RETS feeds with a shared Data Dictionary and Web API, creating a foundation for interoperability that didn’t exist before. But in practice, compliance still varies, and the gaps show up in costly ways: providers juggle local extensions and divergent auth schemes, while consumers are left normalizing inconsistent feeds, deciphering vague errors, and managing multiple token and pagination quirks. The standards themselves are strong — what’s missing is discipline in how they’re applied. Stricter enforcement, clearer documentation, and harmonized practices would close the gap between “technically compliant” and “truly interoperable,” reducing friction for both providers and consumers and delivering on the original promise of RESO.
